This template uses our Bridge API command to connect to Ollama's local API, through which we call Llama3.2 (or any other installed LLM) to process data and generate content. In our example we use a simple list of 10 keywords and ask the local AI to generate an SEO-friendly description of about 50 words for each one. You can just as easily have it generate a large number of thousand-word articles, as Llama 3.2 is very fast and gives great results. Ollama supports Nvidia RTX cards as well as AMD cards, and it can also run on CPU only.

Requirements: Before using this template you need to:

1) Download & install Ollama: https://ollama.com/download

2) Download Llama3.2 using this command line: ollama pull llama3.2

3) Launch Ollama server with this command line: ollama serve
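
Before running the template, it can help to confirm that the server is reachable and that the model was pulled. The following is a minimal Python sketch, not part of the template itself. It assumes Ollama is listening on its default local address (http://localhost:11434) and uses Ollama's `/api/tags` endpoint, which lists the locally installed models. The function names `installed_models` and `fetch_tags` are hypothetical helpers introduced for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running on its default local address.
OLLAMA_URL = "http://localhost:11434"


def installed_models(tags_payload: dict) -> list[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [model["name"] for model in tags_payload.get("models", [])]


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Ask the local Ollama server which models it has pulled.

    Raises OSError (e.g. connection refused) if the server is not running.
    """
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)
```

Calling `installed_models(fetch_tags())` while `ollama serve` is running should return a list that includes the model you pulled. If the call raises a connection error, the server is most likely not started.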

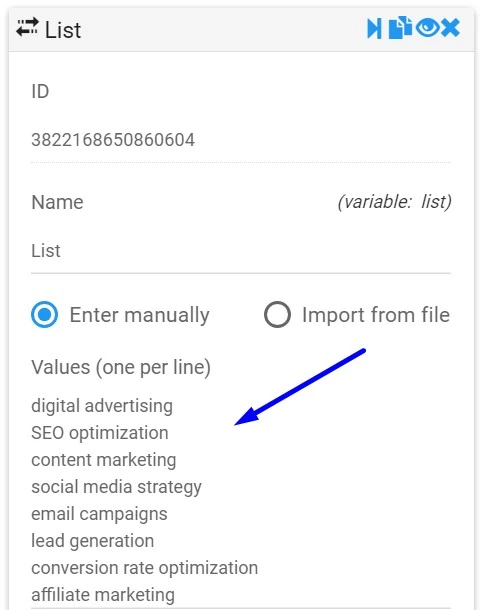

Update your keywords List

Explanations:

This List command creates a Loop that goes through all your keywords and inserts the current keyword value into the prompt.

You can replace this keyword list with a list of titles for full blog article generation. As long as you enter one value per line, the automation will continue to work.
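
To illustrate what the Loop does conceptually, here is a small Python sketch. It is only an analogy for the behaviour described above, not the tool's actual implementation. It splits a one-value-per-line list and substitutes each value for the `{{List}}` placeholder. The names `parse_list` and `build_prompts`, and the short template used in the example, are hypothetical.

```python
def parse_list(raw: str) -> list[str]:
    """Split a one-value-per-line text block, ignoring blank lines."""
    return [line.strip() for line in raw.splitlines() if line.strip()]


def build_prompts(raw_list: str, template: str) -> list[str]:
    """Return one prompt per list value, replacing the {{List}} placeholder."""
    return [template.replace("{{List}}", value) for value in parse_list(raw_list)]


# Example: two keywords produce two prompts, one per loop iteration.
keywords = "seo audit\nlink building"
for prompt in build_prompts(keywords, "Describe this keyword: {{List}}"):
    print(prompt)
```

Because each line becomes one iteration, swapping the keywords for article titles only changes the values, not the mechanism.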

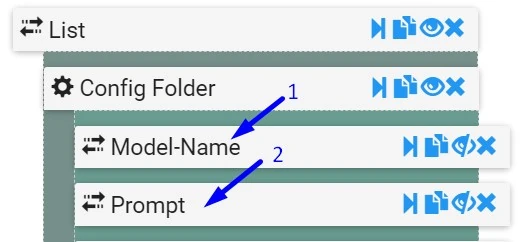

Update LLM Model & Prompt

Explanations:

You only have to change and configure 2 fields, which are:

1) Replace the current value with the exact name of the LLM model you have pulled/installed in Ollama. For instance, we use:

llama3.2:3b-instruct-q4_K_M

2) Replace the Prompt with your own prompt, but keep referencing the current value of its parent Loop, called List. See the syntax below:

Create a 50 words definition of this keyword {{List}}. Provide just the description without any comment nor explanation.
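
For readers who want to see what such a request could look like outside the template, here is a hedged Python sketch of an equivalent call to Ollama's `/api/generate` endpoint. It is an assumption about the kind of request involved, not a description of how the Bridge API command works internally. The default address `http://localhost:11434` is assumed, and `build_payload` and `generate` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: Ollama is running on its default local address.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"
MODEL = "llama3.2:3b-instruct-q4_K_M"  # must match a model you have pulled
PROMPT = (
    "Create a 50 words definition of this keyword {{List}}. "
    "Provide just the description without any comment nor explanation."
)


def build_payload(keyword: str, model: str = MODEL, prompt: str = PROMPT) -> dict:
    """Build the JSON body for one keyword, filling in the {{List}} placeholder."""
    return {
        "model": model,
        "prompt": prompt.replace("{{List}}", keyword),
        "stream": False,  # ask for one complete JSON answer instead of a stream
    }


def generate(keyword: str) -> str:
    """Send one prompt to the local Ollama server and return the generated text."""
    body = json.dumps(build_payload(keyword)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_GENERATE_URL,
        data=body,  # supplying data makes this a POST request
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return json.load(resp)["response"].strip()
```

With the server running, `generate("seo audit")` should return the description text for that keyword. Setting `"stream"` to `False` keeps the sketch simple, since the whole answer then arrives in a single `response` field.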